As the computing problems we face become more challenging and complex, so does the technology we use to solve them.

Take your cell phone, for example. Mobile phones have evolved from devices for making phone calls, to handheld computers that could also make calls, to devices we can’t live without, packed with hundreds of applications and still capable of making phone calls.

Now, they are on their way to becoming artificially intelligent devices that can also make phone calls and run applications. This is an incredible transformation, and it keeps increasing complexity at a rate we cannot afford to fall behind.

Phones are not alone: nearly all electronic systems are following the trend of packing more functionality into the same or a smaller footprint. There are two reasons for this. Semiconductor transistors keep getting smaller, allowing chips to integrate more functions, and memories keep getting larger, allowing the program files stored in them to deliver more functions.

For many digital electronic systems, boundary-scan technology (IEEE 1149.1, also known as JTAG) is the mainstream way to test boards for manufacturing defects such as faulty solder joints, misplaced chips and other issues. Boundary scan is also excellent for in-system programming of memories, and it has been used this way for decades across many industries.

Combining boundary-scan in-system programming with large embedded files, such as FPGA designs or system images, runs into a problem: boundary scan’s architecture, and in particular its inherent reliance on the test clock (TCK) to move every bit of data.

Most boundary-scan test clocks on a board run at about 10 MHz, sometimes slower and sometimes faster. That clock rate, combined with the need to shift data through the entire boundary-scan chain (often several times) for every memory read or write cycle, limits data throughput for in-system programming. As a result, boundary-scan in-system programming in manufacturing works well for small files and boot loaders, but programming large design or application files this way can cause serious performance problems.

In reviewing the market, we are seeing that once embedded files grow beyond about 10MB, memory programming times stretch into hours. Most production targets for in-system programming of a board are measured in minutes, to meet throughput and cost goals. That leaves us with a dilemma: we want the flexibility and cost savings that in-system programming delivers, but we need it to be fast.
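To see why large files push boundary-scan programming into hours, here is a rough back-of-the-envelope estimator. It is a minimal sketch, not ASSET’s method: it assumes an SPI flash is driven by naively toggling boundary-scan cells, so that every SPI data bit costs two full data-register scans (one with SCK low, one with SCK high). The function name and its parameters (`chain_length`, `scans_per_spi_bit`, `tap_overhead_tcks`) are hypothetical, and the estimate ignores erase, page-program, status-polling and SPI command/address overhead, so real times will be longer.

```python
def estimate_spi_program_seconds(
    file_bytes: int,
    chain_length: int,
    tck_hz: float = 10e6,
    scans_per_spi_bit: int = 2,
    tap_overhead_tcks: int = 0,
) -> float:
    """Hypothetical estimate of raw TCK shift time to clock a file into an
    SPI flash by toggling boundary-scan cells.

    Assumptions (illustrative only):
      * each SPI data bit needs `scans_per_spi_bit` full DR scans
        (e.g. one scan with SCK low, one with SCK high);
      * each scan shifts the whole chain (`chain_length` TCKs) plus
        `tap_overhead_tcks` for TAP state transitions;
      * erase, page-program and status-polling time are ignored.
    """
    if chain_length <= 0 or tck_hz <= 0:
        raise ValueError("chain_length and tck_hz must be positive")
    spi_bits = file_bytes * 8
    tcks_per_scan = chain_length + tap_overhead_tcks
    total_tcks = spi_bits * scans_per_spi_bit * tcks_per_scan
    return total_tcks / tck_hz


if __name__ == "__main__":
    ten_mb = 10 * 1024 * 1024
    # Compare a short and a long boundary-scan chain at a 10 MHz TCK.
    for chain in (200, 1000):
        secs = estimate_spi_program_seconds(ten_mb, chain_length=chain)
        print(f"{chain:>5}-cell chain: {secs / 3600:.2f} h")
```

Under these assumptions, a 10MB file on a 1,000-cell chain at 10 MHz needs roughly 4.7 hours of shifting alone. The key point is that time scales with the product of file size and chain length, which is why removing boundary-scan overhead, or bypassing the chain entirely, pays off so dramatically. Setting `tap_overhead_tcks` to a few TCKs per scan approximates the TAP controller’s state transitions.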

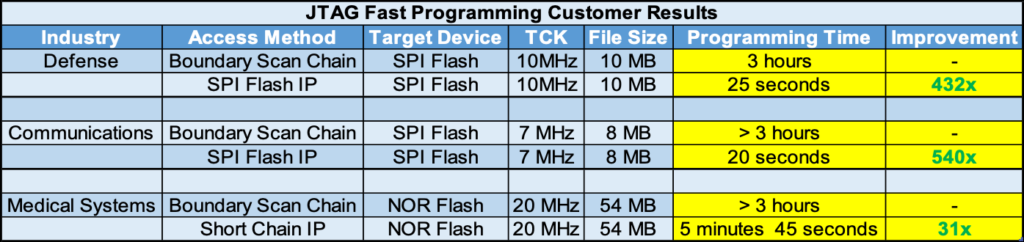

To solve the performance problem for files of 10MB or more, ASSET works closely with customers, delivering special design IP that is downloaded into an FPGA to achieve much faster programming times. The library ASSET developed supports most FPGAs and most memories. One library element reduces most of the boundary-scan overhead to achieve a 10x or greater performance improvement. Another element, designed specifically for SPI memories, is downloaded into the FPGA and uses the system clock to program memories at their data-sheet rates, delivering improvements of 100x or more.

If you have files larger than 10MB, use boundary scan to program them, and need those programming hours to become minutes, ASSET has a solution for you. Below are some customer examples of what we are talking about.

For more information on the technology behind these methods, see our webinar, In-System Fast Flash Programming Technologies, which explains how design and test can work together to achieve the significantly reduced programming times described in this blog.

Click the link to view the webinar recording: