While doing some low-level debugging using SourcePoint, I noticed some interesting and puzzling differences in behavior between Intel E-cores (based on the Atom architecture) and P-cores (based on the Core architecture). They’re particularly noticeable on heterogeneous targets that combine both types of cores, such as the AAEON UP Xtreme i12 Alder Lake board. The difference is at the microarchitectural level, and it affects hypervisor debug and reverse engineering.
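When reading register dumps from a hybrid part, it helps to know which kind of core a given thread is. The sketch below decodes the CPUID values the Intel SDM documents for this: CPUID.(EAX=07H,ECX=0):EDX bit 15 reports a hybrid part, and CPUID.(EAX=1AH):EAX bits 31:24 give the core type (20H for Intel Atom, 40H for Intel Core). The function and enum names are hypothetical, and the code only interprets register values you have already captured (for example, from a debugger’s register view).

```c
#include <stdbool.h>
#include <stdint.h>

/* Core-type values from CPUID leaf 1AH, EAX bits 31:24 (Intel SDM). */
typedef enum {
    CORE_TYPE_UNKNOWN = 0,
    CORE_TYPE_ATOM    = 0x20, /* E-core */
    CORE_TYPE_CORE    = 0x40  /* P-core */
} core_type_t;

/* True if CPUID.(EAX=07H,ECX=0):EDX bit 15 (Hybrid) is set. */
bool cpu_is_hybrid(uint32_t cpuid07_edx)
{
    return ((cpuid07_edx >> 15) & 1u) != 0;
}

/* Decode the core type of the logical processor that executed
 * CPUID.(EAX=1AH,ECX=0); the low 24 bits (native model ID) are ignored. */
core_type_t cpu_core_type(uint32_t cpuid1a_eax)
{
    switch ((cpuid1a_eax >> 24) & 0xFFu) {
    case 0x20: return CORE_TYPE_ATOM;
    case 0x40: return CORE_TYPE_CORE;
    default:   return CORE_TYPE_UNKNOWN;
    }
}
```

Because leaf 1AH describes only the core that executes it, the value has to be captured separately on each thread.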

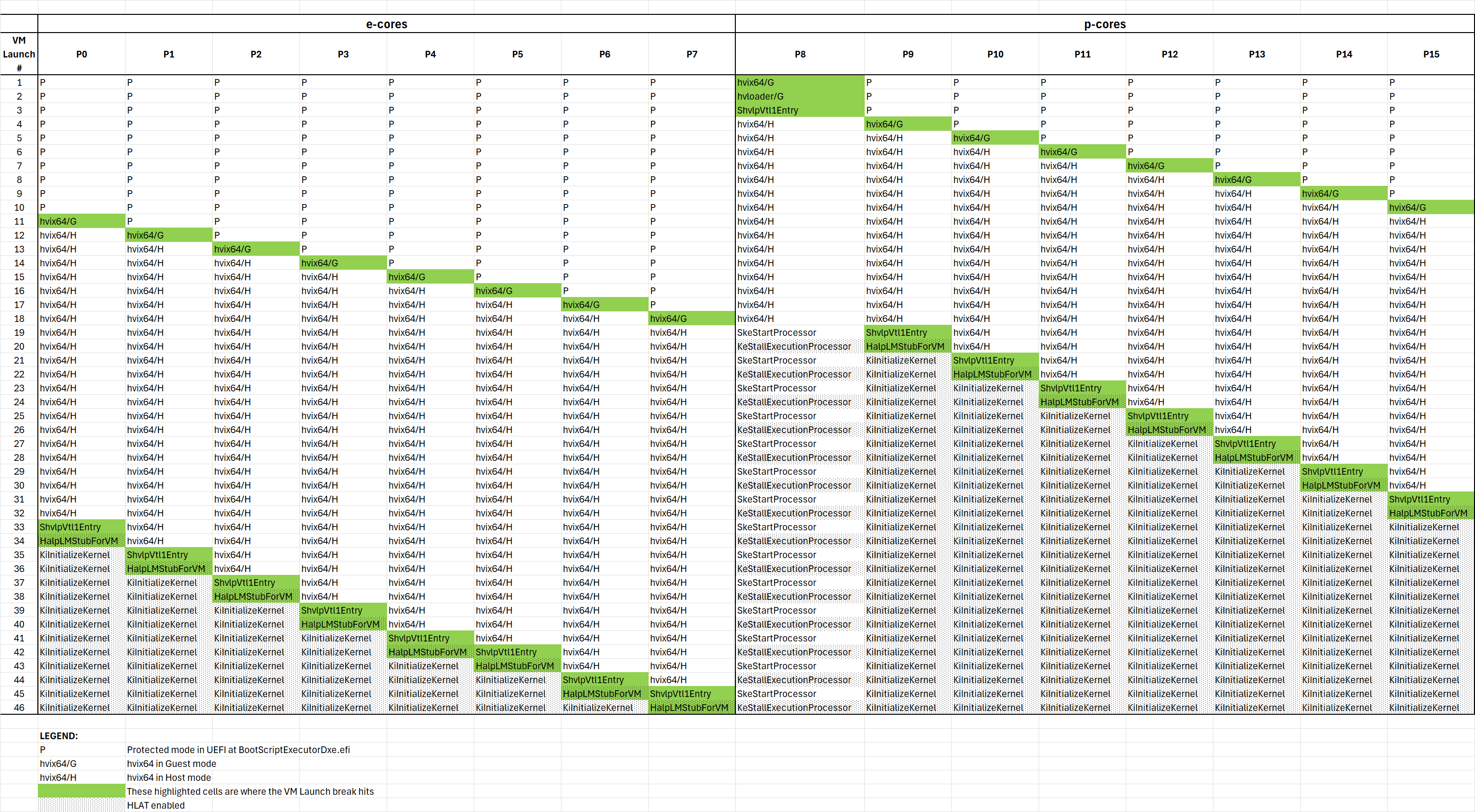

Let’s start by looking back at the behavior of heterogeneous targets as we halt at VM Launch breakpoints during Windows boot. Building on the work from my earlier blog post, “VT-rp, HLAT, and my AAEON Alder Lake Core i7-1270PE board: Part 3”, the sequence of 46 VM Launches yields the following profile:

You’ll probably have to expand the image to see it clearly. In a nutshell, here are the highlights of the boot process as they relate to VMX behavior.

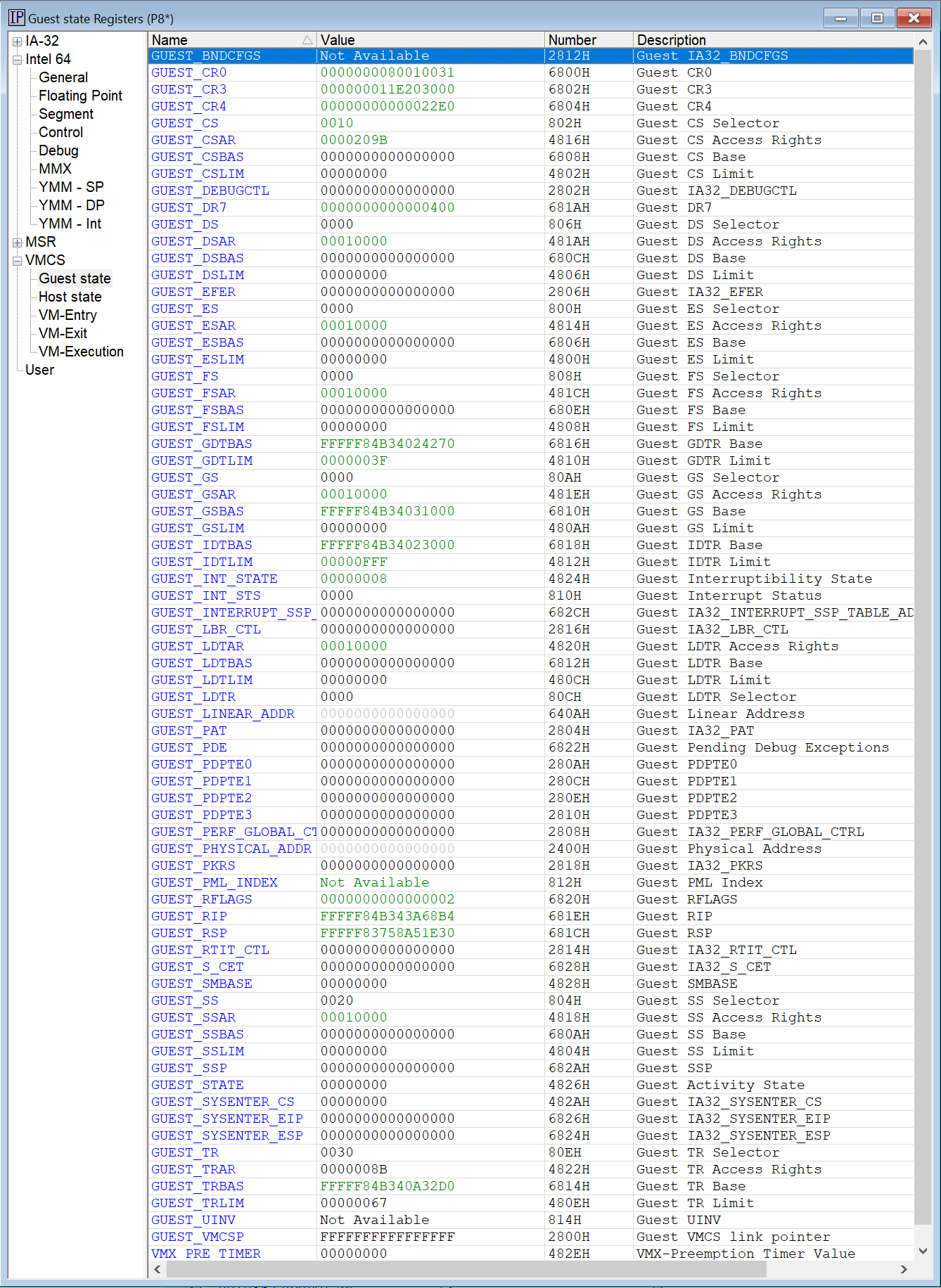

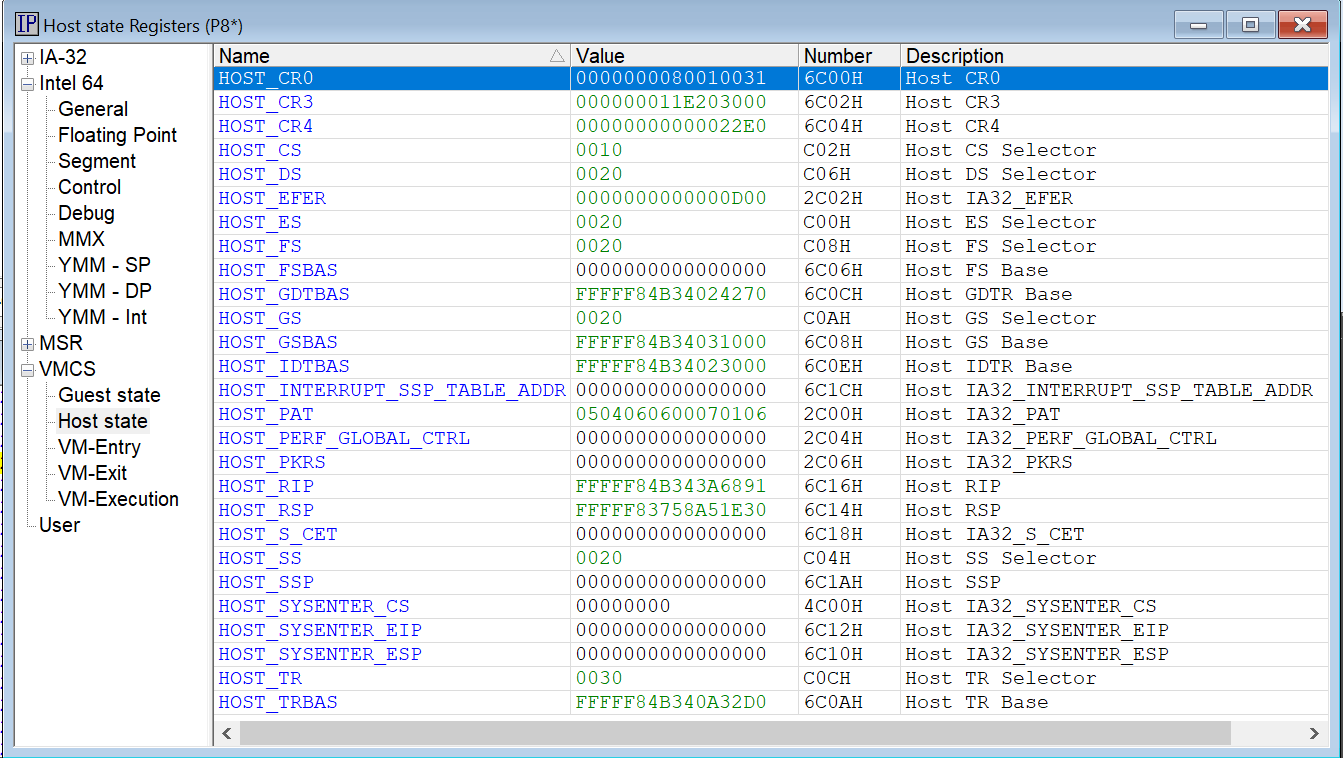

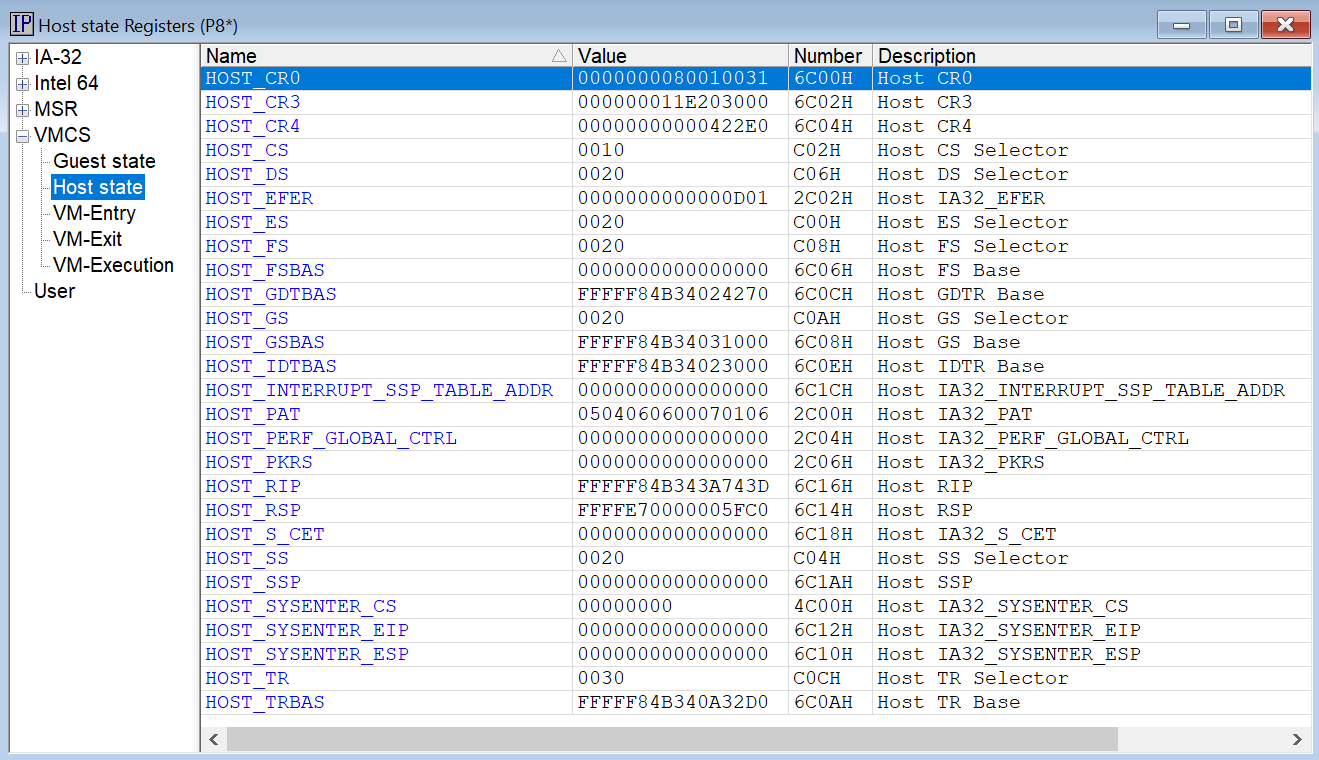

The first VM Launch lands in hvix64 (the Windows hypervisor) on P8, the first P-core, which is the bootstrap processor (BSP). It is in Guest mode, of course (it is, after all, a VM Launch). With SourcePoint’s VMCS Viewer/Editor, you can see all of its VMCS fields; I’ll show the Guest state Registers and Host state Registers below:

The second VM Launch lands P8 in hvloader in Guest mode.

The third VM Launch lands P8 at the VTL1 entry point, in the ShvlpVtl1Entry function of the Secure Kernel. You’ll see that all other threads, both P-core and E-core, are in Protected mode at this point. For P8, the Guest state Registers and Host state Registers are as below:

Editor’s Note: It’s pretty amazing that SourcePoint can read the P-core Host mode state and memory while the processor is in the Secure Kernel in Guest mode!
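The split into Guest state and Host state fields in the VMCS Viewer mirrors how VMREAD/VMWRITE field encodings are laid out in the Intel SDM: each 32-bit encoding carries the field’s group and width. A minimal decoding sketch, assuming that layout; the helper names are hypothetical, and the two example encodings (GUEST_RIP = 0x681E, HOST_RIP = 0x6C16) are taken from the SDM’s field-encoding tables.

```c
#include <stdint.h>

/* VMCS field-encoding layout (Intel SDM, VMCS field encodings):
 *   bit 0      access type: 0 = full, 1 = high 32 bits of a 64-bit field
 *   bits 9:1   index
 *   bits 11:10 type: 0 = control, 1 = VM-exit information,
 *                    2 = guest state, 3 = host state
 *   bits 14:13 width: 0 = 16-bit, 1 = 64-bit, 2 = 32-bit, 3 = natural width
 */
enum vmcs_type  { VMCS_CONTROL = 0, VMCS_EXIT_INFO = 1, VMCS_GUEST = 2, VMCS_HOST = 3 };
enum vmcs_width { VMCS_W16 = 0, VMCS_W64 = 1, VMCS_W32 = 2, VMCS_WNAT = 3 };

/* Two well-known encodings, used here only as examples. */
#define VMCS_GUEST_RIP 0x681Eu
#define VMCS_HOST_RIP  0x6C16u

/* 1 if the encoding selects the high 32 bits of a 64-bit field. */
unsigned vmcs_field_is_high(uint32_t enc) { return enc & 1u; }

/* Index of the field within its type/width group. */
unsigned vmcs_field_index(uint32_t enc) { return (enc >> 1) & 0x1FFu; }

/* Which area of the VMCS the field belongs to (guest, host, ...). */
enum vmcs_type vmcs_field_type(uint32_t enc)
{
    return (enum vmcs_type)((enc >> 10) & 3u);
}

/* Size class of the field. */
enum vmcs_width vmcs_field_width(uint32_t enc)
{
    return (enum vmcs_width)((enc >> 13) & 3u);
}
```

For example, 0x681E decodes as a natural-width guest-state field with index 15, and 0x6C16 as a natural-width host-state field with index 11.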

The next series of VM Launches takes each of the P-cores into hvix64 in Guest mode, right after which each one transitions into hvix64 in Host mode. Then, on the 11th VM Launch, the first E-core, P0, becomes the active core and lands in hvix64 in Guest mode. The E-cores then follow the same pattern as the P-cores, until all of them are in hvix64 in Host mode.

Starting with the 19th VM Launch, the bootstrap processor lands in the SkeStartProcessor function, and the actual VM Launch breakpoint is hit on P9, which enters VTL1 within the ShvlpVtl1Entry function.

You then see a repeating pattern whereby the bootstrap processor toggles between VTL1 (SkeStartProcessor) and VTL0 (KeStallExecutionProcessor). When in the VTL0 Normal Kernel, the processor has HLAT active. Note that VTL1 sits at the root of trust and has no kernel drivers, so it needn’t protect its own page tables with HLAT, whereas VTL0 correctly has HLAT enabled.
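Whether HLAT is active for a given guest shows up in the VMCS execution controls. A minimal sketch, assuming the bit positions given in the Intel SDM: primary processor-based control bit 17 (“activate tertiary controls”) and tertiary processor-based control bit 1 (“enable HLAT”). The helper name is hypothetical, and it only interprets control values already read out of the VMCS, for example with a VMCS viewer.

```c
#include <stdbool.h>
#include <stdint.h>

/* Bit positions per the Intel SDM VM-execution control tables. */
#define PRIMARY_ACTIVATE_TERTIARY_CONTROLS (1u << 17)  /* 32-bit primary controls */
#define TERTIARY_ENABLE_HLAT               (1ull << 1) /* 64-bit tertiary controls */

/* True if HLAT is in effect for the guest these controls describe.
 * When "activate tertiary controls" is 0, the processor behaves as if all
 * tertiary controls were 0, so the tertiary value is ignored in that case. */
bool vmcs_hlat_enabled(uint32_t primary_proc_ctls, uint64_t tertiary_proc_ctls)
{
    if ((primary_proc_ctls & PRIMARY_ACTIVATE_TERTIARY_CONTROLS) == 0)
        return false;
    return (tertiary_proc_ctls & TERTIARY_ENABLE_HLAT) != 0;
}
```

Applied to the VMCS of a VTL0 guest in the boot sequence above, this check would be expected to return true, and false for VTL1.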

Each P-core in turn breaks first in VTL1 (ShvlpVtl1Entry) and then in VTL0 (HalpLMStubForVM).

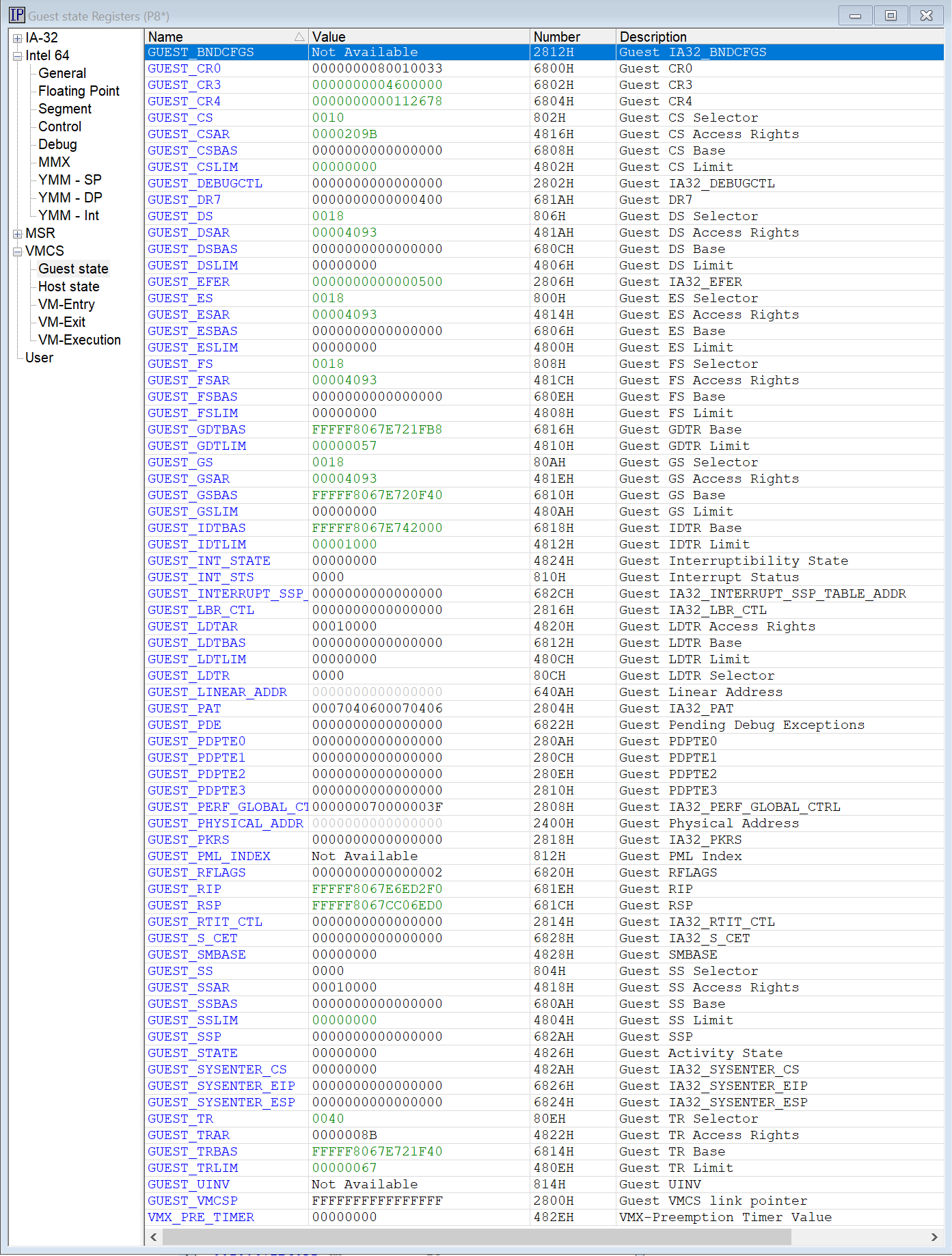

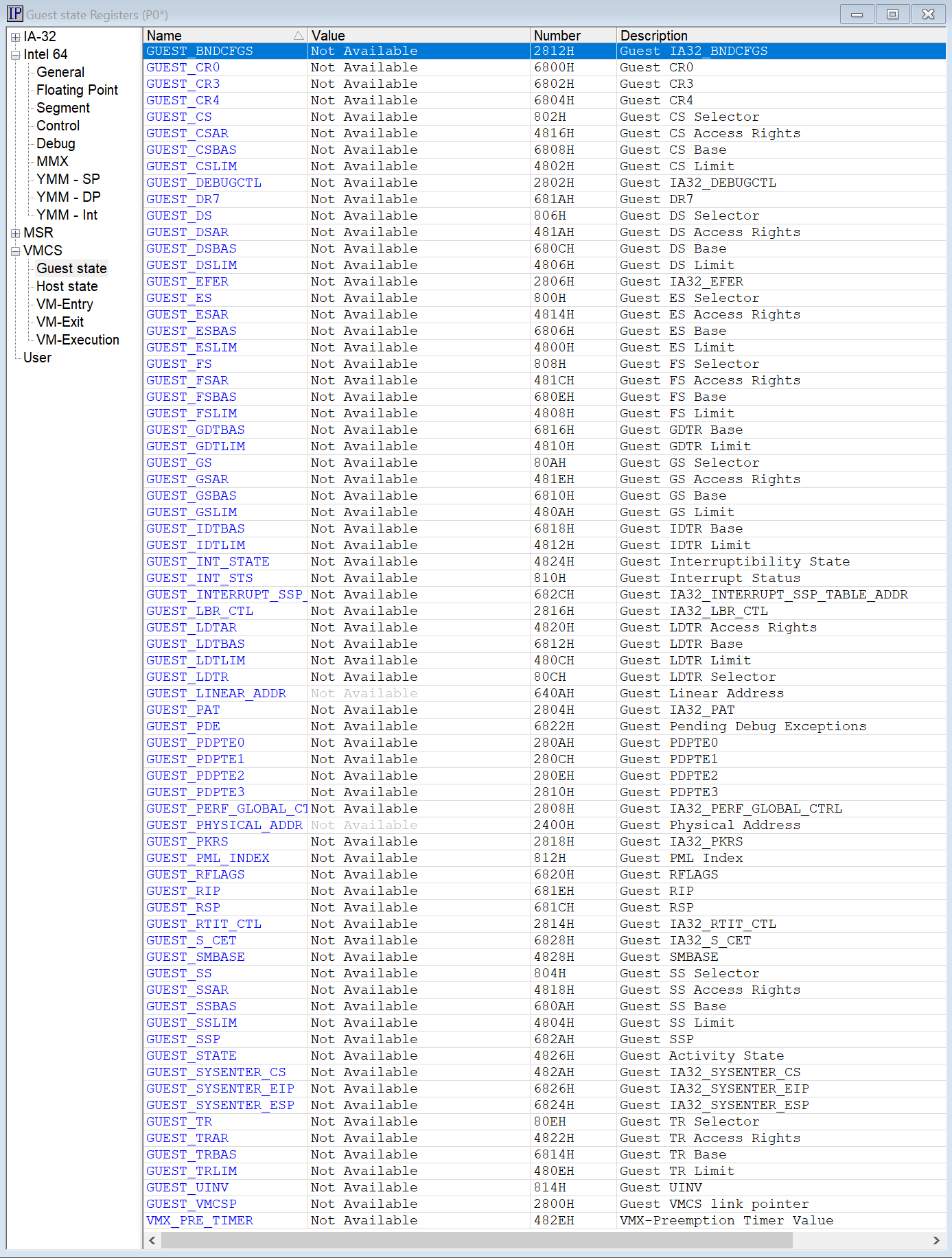

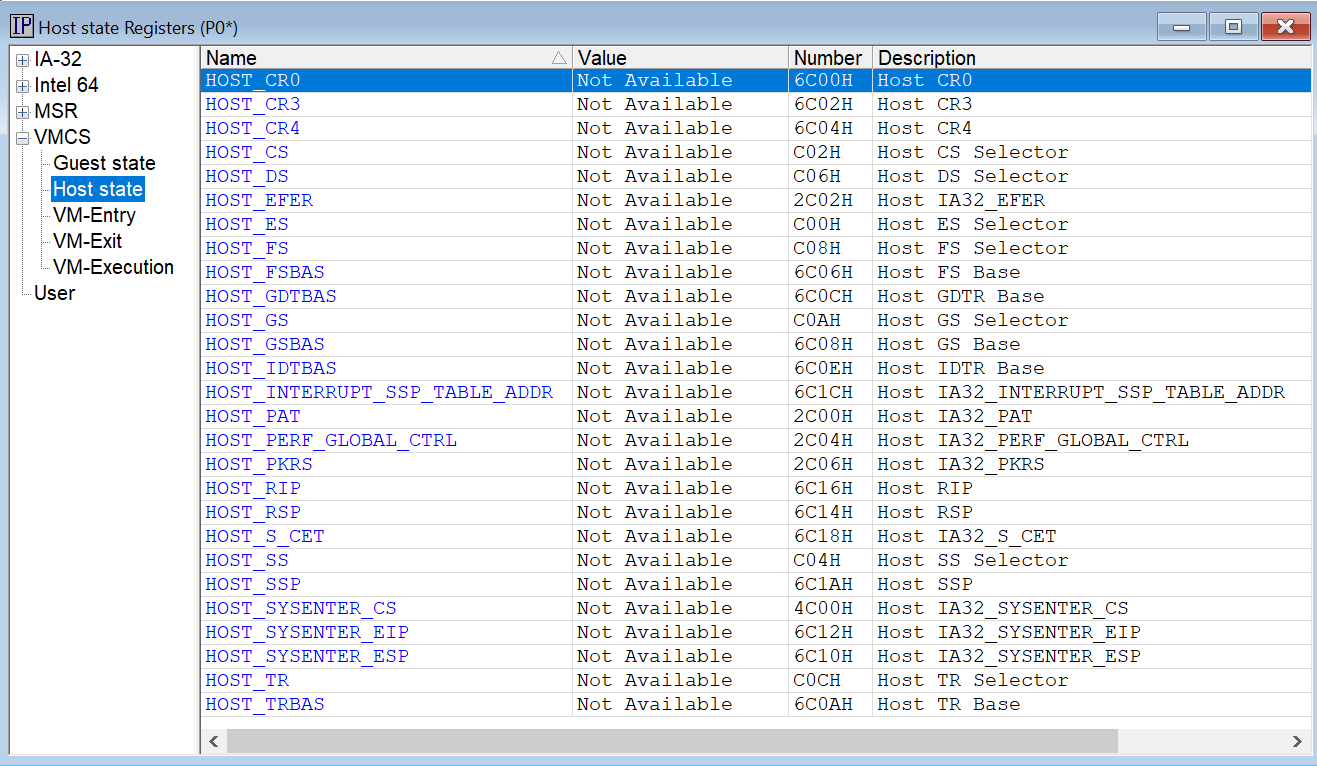

Things change with the 33rd VM Launch. At this point, all the P-cores have been initialized, and HLAT is enabled for each processor’s NT kernel, as shown in its VMCS. Now it’s the E-cores’ turn to go through the same process, and P0 lands again in ShvlpVtl1Entry. But when we look at an E-core’s VMCS, we can’t read either the Guest or the Host state; both just display “Not Available”:

Strange, isn’t it? We need to dig into this.

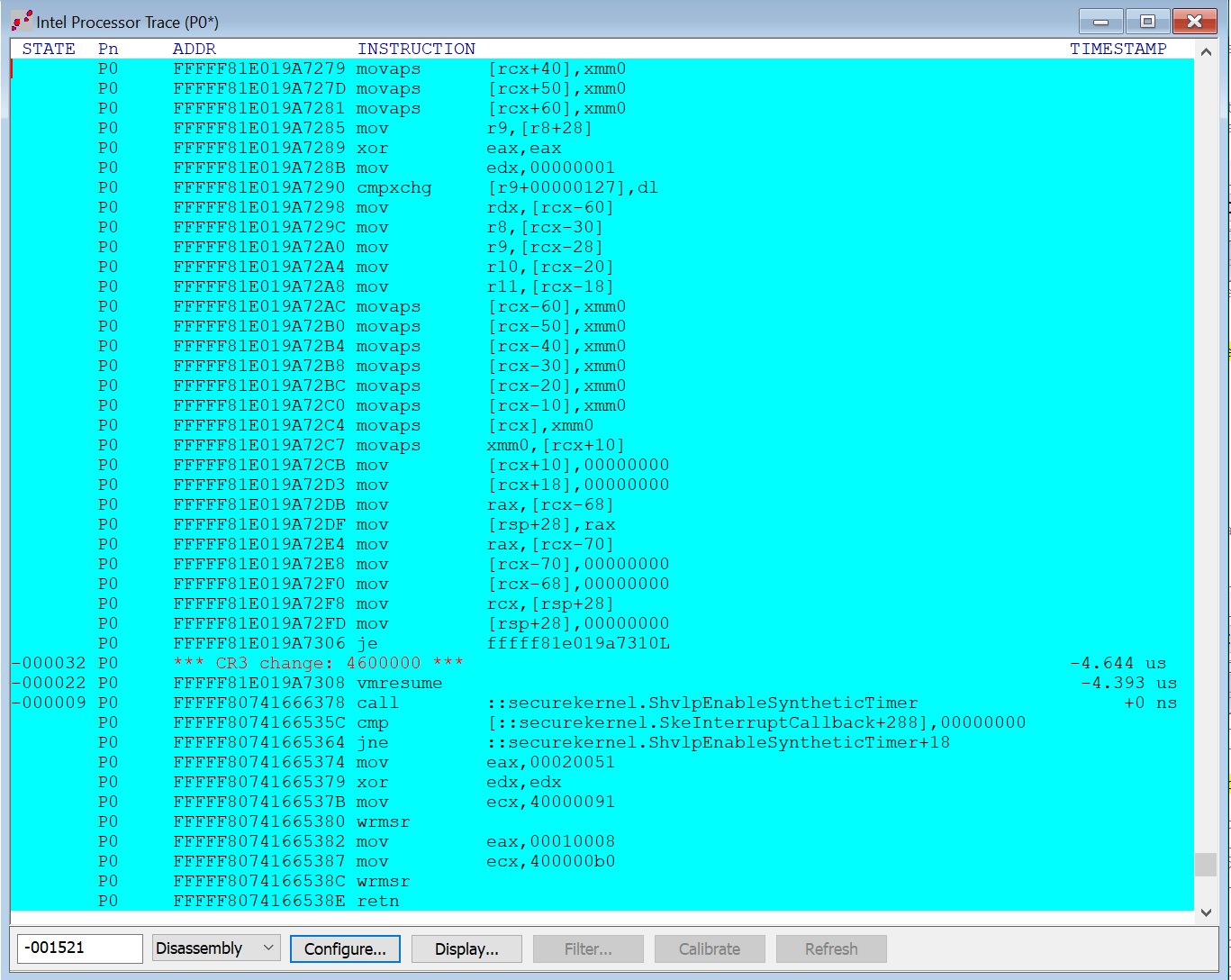

One other thing: this strange behavior is not related to SourcePoint’s almost magical ability to read Host state memory while in Guest mode, which still works on the E-cores. A good way to show this is to use Intel Processor Trace to capture the execution trace associated with a VM Resume breakpoint on an E-core. To see this in action, break into ShvlpVtl1Entry on P0 and set a VM Resume breakpoint there. On the next Go, we expect P0 to take a VM Exit, run some Host mode code, and then land back in the Secure Kernel, with all of the execution trace captured for both Guest and Host modes. And this is what we see in the image below. We’ve captured Host mode memory (instructions) while in Guest mode from the VM Resume:

Editor’s Note: the last 12 instructions after the VM Resume instruction above are actually artifacts that are executed initially after the Go, and that Intel Processor Trace spuriously repeats after the VM Resume breakpoint is actually hit. You can ignore them.

Note that to get this to work, SourcePoint has to clear the Intel Processor Trace Conceal bits upon the first entry into Host mode (thank you, Windows, for the mitigation). For a further explanation, see my article “JTAG debug of Windows Hyper-V / Secure Kernel with WinDbg and EXDI: Part 4”. So, for Intel PT to work on a given processor, there has to be a break into Host mode on that processor at some point.
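For reference, the Intel SDM defines three “conceal VMX from PT” controls: secondary processor-based VM-execution control bit 19, VM-exit control bit 24, and VM-entry control bit 17. When set, they suppress the packets (such as PIPs and VMCS packets) that would otherwise mark VMX non-root operation and VM transitions in the trace. The sketch below assumes these are the bits the text refers to; the struct and function names are hypothetical, and writing the values back (via VMWRITE or a debugger’s VMCS editor) is deliberately left out.

```c
#include <stdint.h>

/* "Conceal VMX from PT" control bits, per the Intel SDM. */
#define SECONDARY_CONCEAL_VMX_FROM_PT (1u << 19) /* secondary processor-based controls */
#define EXIT_CONCEAL_VMX_FROM_PT      (1u << 24) /* VM-exit controls */
#define ENTRY_CONCEAL_VMX_FROM_PT     (1u << 17) /* VM-entry controls */

/* Hypothetical container for the three relevant VMCS control fields. */
struct vmx_ctls {
    uint32_t secondary_proc;
    uint32_t vmexit;
    uint32_t vmentry;
};

/* Return a copy of the controls with all three conceal bits cleared, so
 * Intel PT no longer hides VMX transitions; all other bits are preserved. */
struct vmx_ctls unconceal_vmx_from_pt(struct vmx_ctls c)
{
    c.secondary_proc &= ~SECONDARY_CONCEAL_VMX_FROM_PT;
    c.vmexit         &= ~EXIT_CONCEAL_VMX_FROM_PT;
    c.vmentry        &= ~ENTRY_CONCEAL_VMX_FROM_PT;
    return c;
}
```

Since these controls live in each processor’s own VMCS, the change has to be made per processor, which is consistent with needing a Host mode break on each one.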

Want to learn more? Check out my webinar recording on debugging Windows internals using JTAG.

And one last thing: I fed the full Intel Processor Trace data into ChatGPT to see what it made of it. Although not perfect, the analysis is certainly informative, and you can refine it further to get more insight:

The provided assembly trace captures Intel PT (Processor Trace) events during a VM Exit from the Secure Kernel. This suggests it’s monitoring a transition between a virtualized secure environment (VTL1) and the hypervisor or host system (VTL0).

Key Observations:

- VMCS Pointer Change
  - The log begins with a VMCS (Virtual Machine Control Structure) pointer update, indicating a switch in VM state.
- Secure Kernel Functions
  - ShvlpInitiateFastHypercall: This suggests a hypercall is being initiated, likely to communicate with the hypervisor.
  - ShvlpInitializeSynic: This function is related to Hyper-V’s Synthetic Interrupt Controller (SynIC) setup, possibly configuring virtualized interrupts.
- Security Checks
  - __security_check_cookie: Multiple security cookie checks are performed, which is a standard buffer overflow protection mechanism used by Windows.
  - This implies that secure control flow integrity is being enforced during this transition.
- Register and Stack Operations
  - MOV and XOR instructions suggest stack frame validation (e.g., xor rcx, rsp may be used to detect stack tampering).
  - RET and JMP instructions confirm normal function unwinding and control flow redirection.
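The xor rcx, rsp pattern is the epilogue half of the MSVC /GS buffer-security check on x64: the prologue stores the global cookie XORed with RSP in the frame, and the epilogue XORs the saved value with RSP again before calling __security_check_cookie, which compares it with the global cookie. The sketch below is a simplified simulation of that logic only; plain integers stand in for registers and the stack slot, and the names and cookie value are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-in for the global __security_cookie (arbitrary value). */
static const uint64_t security_cookie = 0x0123456789ABCDEFull;

/* Prologue: mov rax, [cookie]; xor rax, rsp; mov [rsp+N], rax
 * Returns the value that would be stored in the frame's cookie slot. */
uint64_t gs_prologue(uint64_t rsp)
{
    return security_cookie ^ rsp;
}

/* Epilogue: mov rcx, [rsp+N]; xor rcx, rsp; call __security_check_cookie
 * Returns true if the check passes (cookie slot intact, same RSP). */
bool gs_epilogue_ok(uint64_t saved_slot, uint64_t rsp)
{
    uint64_t rcx = saved_slot ^ rsp; /* undo the XOR with this frame's RSP */
    return rcx == security_cookie;   /* the comparison the check routine makes */
}
```

An overwritten cookie slot, or a mismatched RSP, makes the recovered value differ from the global cookie, which is what the real check routine treats as stack corruption.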

What is Happening?

- A VM Exit occurs, and the system updates the VMCS pointer.

- The Secure Kernel performs a hypercall (ShvlpInitiateFastHypercall), likely requesting a privileged operation.

- Various security checks are executed to prevent buffer overflows and unauthorized execution.

- The system may be handling an interrupt or exception, initializing ShvlpInitializeSynic.

- Control is returned to the hypervisor or host environment.

Possible Use Cases:

- Debugging Secure Kernel interactions with Hyper-V.

- Monitoring virtualization security mechanisms.

- Analyzing VM Exit reasons (e.g., privileged instruction execution, interrupt handling).
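As a starting point for analyzing VM Exit reasons, the exit-reason VMCS field can be decoded as sketched below. The layout (bits 15:0 = basic exit reason, bit 31 = VM-entry failure) and the numeric reasons come from the Intel SDM’s exit-reason table; only a subset is listed, and the function names are hypothetical. On Intel hardware, a Hyper-V hypercall such as the one ShvlpInitiateFastHypercall issues is made with VMCALL, which the hypervisor sees as basic exit reason 18.

```c
#include <stdbool.h>
#include <stdint.h>

/* Name a basic exit reason (bits 15:0 of the exit-reason field).
 * Subset of the Intel SDM exit-reason table; not exhaustive. */
const char *vmx_exit_reason_name(uint32_t exit_reason)
{
    switch (exit_reason & 0xFFFFu) {
    case 0:  return "Exception or NMI";
    case 1:  return "External interrupt";
    case 10: return "CPUID";
    case 12: return "HLT";
    case 18: return "VMCALL";
    case 28: return "Control-register access";
    case 30: return "I/O instruction";
    case 31: return "RDMSR";
    case 32: return "WRMSR";
    case 33: return "VM-entry failure: invalid guest state";
    case 48: return "EPT violation";
    case 49: return "EPT misconfiguration";
    default: return "Other";
    }
}

/* Bit 31 of the exit-reason field is set when VM entry itself failed. */
bool vmx_exit_is_entry_failure(uint32_t exit_reason)
{
    return ((exit_reason >> 31) & 1u) != 0;
}
```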